Conversion Experiences

Saul, so we’re told, had his conversion experience on the road to Damascus. Astronomers had theirs in labs and machine shops starting in the 1960s. By this, I mean that astronomers developed tools and instruments to convert traditional photographs, made of glass and emulsion, into digital zeros and ones. This began a process that would completely reshape how astronomers work. Once data was in a digital format, it could move about more easily. Astronomers could work across wavelengths and share data collected with different telescopes. To borrow a word fashionable among historians, astronomy could become more transnational as data circulated not only across desktops but across national borders. But first it had to be digital.

One of the most innovative tools for converting astronomical photographs to digital format originated in the mid-1960s with a graduate student at Cambridge University.

In 1970, Ed Kibblewhite was a 26-year-old just a few months away from filing his dissertation. Like many in his professional cohort, Kibblewhite had moved into astronomy from another field, in this case electrical engineering. In 1966, he had proposed building an “automatic Schmidt reduction engine” for his dissertation.

As Kibblewhite’s proposal suggested, the machine’s prime application was analyzing images taken with Schmidt telescopes, instruments whose optics are designed to take in much wider fields of view than traditional reflecting telescopes. To understand the data challenge posed by these large-scale survey telescopes, consider their output. The photograph itself is a negative: bright objects such as nearby stars show up as dark black spots, while galaxies appear fainter and fuzzier. A typical exposure might record as many as one million astronomical objects.

Kibblewhite’s graduate advisor approved his plan and, for the next five years, he designed and built what eventually became the Automated Photographic Measuring facility (or APM).

He estimated the initial cost at just under £33,000, a considerable sum in the late 1960s and still a substantial one today (close to $800,000 in 2014). In developing his design, Kibblewhite looked to earlier machines as something to improve upon. Astronomers had routinely built and used “measuring engines” since the 1950s. These instruments scanned photographs and electronically recorded information such as the coordinates of stars and galaxies. Commercial firms like PerkinElmer eventually made “microphotometers” that allowed researchers to manually map the location of a star or galaxy on a photographic plate, measure its optical density, and convert that signal into a value of the object’s actual brightness.

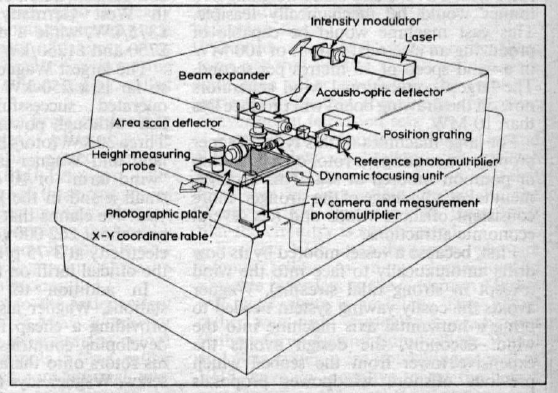
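
The microphotometer’s last step turned a density reading into brightness, and it can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions. It uses the standard definition of optical density, D = log10(incident / transmitted light). The calibration numbers and the function names `transmittance` and `relative_intensity` are hypothetical, made up for illustration rather than taken from any real instrument. Because the plate is a negative, a higher density means more light reached the emulsion, so density rises with the object’s brightness. In practice the relation between density and exposure (the emulsion’s characteristic curve) had to be calibrated for each plate and is nonlinear, especially near saturation.

```python
import bisect

def transmittance(density: float) -> float:
    """Fraction of light passing through the emulsion.

    Optical density is D = log10(incident / transmitted), so T = 10**(-D).
    """
    return 10.0 ** (-density)

# Hypothetical calibration curve (illustrative numbers, not real plate data):
# densities measured on calibration spots that received known relative
# exposures, listed in increasing order of density.
CAL_DENSITY = [0.1, 0.3, 0.8, 1.5, 2.2]
CAL_LOG_EXPOSURE = [-1.0, -0.5, 0.0, 0.5, 1.0]

def relative_intensity(density: float) -> float:
    """Convert a measured density to relative intensity.

    Interpolates linearly in log exposure between calibration points and
    clamps outside the calibrated range rather than extrapolating.
    """
    if density <= CAL_DENSITY[0]:
        log_e = CAL_LOG_EXPOSURE[0]
    elif density >= CAL_DENSITY[-1]:
        log_e = CAL_LOG_EXPOSURE[-1]
    else:
        # Find the calibration segment that brackets this density.
        i = bisect.bisect_right(CAL_DENSITY, density)
        d0, d1 = CAL_DENSITY[i - 1], CAL_DENSITY[i]
        e0, e1 = CAL_LOG_EXPOSURE[i - 1], CAL_LOG_EXPOSURE[i]
        log_e = e0 + (e1 - e0) * (density - d0) / (d1 - d0)
    return 10.0 ** log_e
```

With these made-up calibration points, a reading of density 1.15 falls halfway between the 0.8 and 1.5 spots. `relative_intensity(1.15)` therefore returns about 1.78, which is 10 to the power 0.25.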

Kibblewhite decided to use a very bright laser beam as the light source for the APM. Moving rapidly, the scanner could process an entire Schmidt plate, with a million or so separate objects, about a hundred times faster than earlier measuring machines, and do so automatically.

For the actual image analysis, Kibblewhite received assistance from an unexpected source. In 1967, he met James Tucker, a cancer researcher in Cambridge’s Pathology Department, who was developing software to process images of cell nuclei. Both researchers needed to subtract the background and delineate the edges of “fuzzy” objects, whether cell nuclei or galaxies, and, when stained black, biological cells “looked just like star images.” Kibblewhite adopted a variation of Tucker’s program for the APM. The data it produced for each object (its position, its total brightness, and the distribution of brightness across it) passed through a series of computers before being stored on magnetic tape.
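
The paragraph above describes a kind of processing that a short Python sketch can illustrate. The steps are to subtract the background, decide where a fuzzy object ends, and record each object’s position, total brightness, and distribution of brightness. This is not Tucker’s program or the APM’s actual algorithm, whose details are not given here. It is a generic, hypothetical version built on these assumptions:

- a single global background level;
- a threshold of `k` noise units above that background to mark an object’s edge;
- intensity-weighted moments to summarize each object.

The function name `detect_objects` and its parameters are invented for illustration.

```python
from collections import deque
from statistics import median

def detect_objects(image, k=3.0, min_sigma=1.0):
    """Find and measure objects in a 2-D list of intensities.

    Assumes the plate has already been converted from density to intensity,
    so objects are brighter than the sky background.
    """
    flat = [v for row in image for v in row]
    background = median(flat)  # single global sky level (a simplification)
    # Robust noise estimate: median absolute deviation scaled to a Gaussian sigma,
    # with a floor so a perfectly flat background does not give zero noise.
    mad = median([abs(v - background) for v in flat])
    sigma = max(1.4826 * mad, min_sigma)
    threshold = k * sigma  # isophote that defines each object's edge
    height, width = len(image), len(image[0])
    seen = [[False] * width for _ in range(height)]
    objects = []
    for y in range(height):
        for x in range(width):
            if seen[y][x] or image[y][x] - background <= threshold:
                continue
            # Flood-fill the 4-connected region of pixels above the threshold.
            pixels = []
            queue = deque([(y, x)])
            seen[y][x] = True
            while queue:
                py, px = queue.popleft()
                pixels.append((py, px, image[py][px] - background))
                for ny, nx in ((py + 1, px), (py - 1, px), (py, px + 1), (py, px - 1)):
                    if (0 <= ny < height and 0 <= nx < width and not seen[ny][nx]
                            and image[ny][nx] - background > threshold):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            # Background-subtracted total brightness and its centroid (position).
            flux = sum(f for _, _, f in pixels)
            xbar = sum(px * f for _, px, f in pixels) / flux
            ybar = sum(py * f for py, _, f in pixels) / flux
            # Intensity-weighted second moments: how the light is spread out.
            mxx = sum((px - xbar) ** 2 * f for _, px, f in pixels) / flux
            myy = sum((py - ybar) ** 2 * f for py, _, f in pixels) / flux
            mxy = sum((px - xbar) * (py - ybar) * f for py, px, f in pixels) / flux
            objects.append({"x": xbar, "y": ybar, "flux": flux, "npix": len(pixels),
                            "mxx": mxx, "myy": myy, "mxy": mxy})
    return objects
```

Try it on a small synthetic image containing a five-pixel "star" and one isolated bright pixel. It returns two objects, and the star’s centroid lands on its central pixel. A real survey plate would also need a background that varies across the plate and a way to separate overlapping images, both omitted here.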

Kibblewhite continued to refine and improve the APM for years after its 1970 debut; a good description is in this 1981 paper. He described the machine as a “national facility” available to “astronomers from all over the world” who wanted to convert and analyze their photographic data. Scientists would come to Cambridge with their own astronomical photographs. Once the conversion was done (it took about 7 hours to convert a typical plate into about two billion digital pixels), the astronomer could then “walk away with his data and start working out what it all means.”

Old rules of sharing and ownership still prevailed: converted data belonged to the individual scientist rather than going to a common repository for later use by others. But these rules were gradually dissolving as data became digital.
Besides fostering an increased need for collaboration and an expanded professional skill set, the digital nature of astronomical data raised an increasingly important issue. Unlike the physical artifacts that characterized the photographic era, digital data was easy to move. Data could circulate. And data that circulated more easily had the potential to disrupt longstanding community traditions and norms about ownership and access. The friction that had stood in the way of sharing and collaboration was reduced. But first one had to be able to share the data, and conversion was a critical first step in the process.
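
The APM’s conversion figures (about 7 hours per plate and about two billion pixels) imply a rough scanning rate. This back-of-the-envelope calculation uses only those two rounded numbers and ignores any set-up time, so it should be read as an order-of-magnitude estimate:

$$
\frac{2\times10^{9}\ \text{pixels}}{7\ \text{h}\times 3600\ \text{s/h}} = \frac{2\times10^{9}\ \text{pixels}}{25\,200\ \text{s}} \approx 7.9\times10^{4}\ \text{pixels/s}
$$

That is roughly 80,000 pixels every second, sustained for the better part of a working day.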
