Checks and Stripes

Leaping Robot note: Historians, like most of us, like controversies. They provide a valuable lens to look at a range of issues. This post was inspired by an article that appeared recently in Science. It concerns a simmering feud between two groups of researchers over how to interpret some images of gold nano-particles made with a scanning tunneling microscope (STM). My colleague, Cyrus Mody, a historian of science at Rice University, wrote a prize-winning book called Instrumental Community that tells the story of the invention and spread of scanning probe microscopy. ((By forming a community, he argues, these researchers were able to innovate rapidly, share the microscopes with a wide range of users, and generate prestige (including the 1986 Nobel Prize in Physics) and profit (as the technology found applications in industry). Mody shows that both the technology of probe microscopy and the community model offered by the probe microscopists contributed to the development of political and scientific support for nanotechnology and the global funding initiatives that followed.)) So Cyrus is an excellent person to offer a more nuanced reading of this controversy. One might think that the nano-feud is, as one person notes, just a "minor storm in a nano teapot." Mody's guest blog post goes deeper than this and shows how controversies like this, besides being rather common, also tell us something important about how scientists (and science journalists) communicate with each other and the public. Here's Cyrus...

***

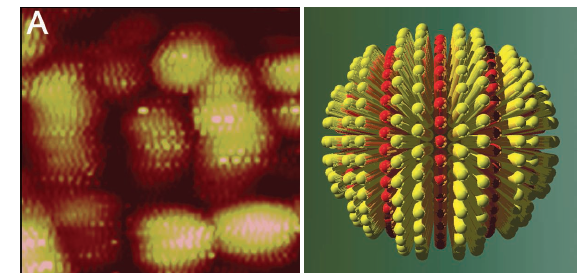

The January 24 issue of Science has a news item by Robert Service with the juicy title “Nano-Imaging Feud Sets Online Sites Sizzling” describing a multiyear tussle over a decade’s worth of science based on some scanning tunneling microscope (STM) images of gold nanoparticles. The STM images are from Francesco Stellacci’s group, first at MIT and then at the Swiss Federal Institute of Technology in Lausanne. They purport to show “stripes” of organic molecules attached to particles with diameters of 20 nanometers or less, in an arrangement resembling lines of latitude. However, a number of critics have insisted – in blogs, Twitter feeds, and elsewhere – that Stellacci’s stripes are actually instrumental artifacts.

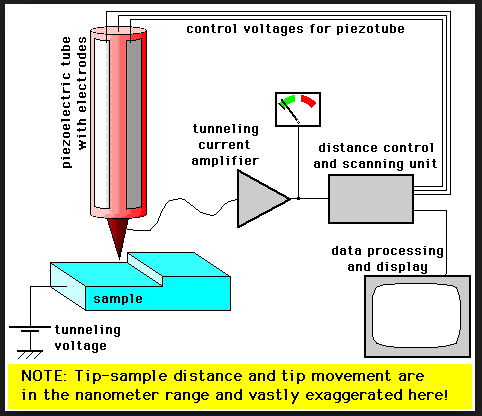

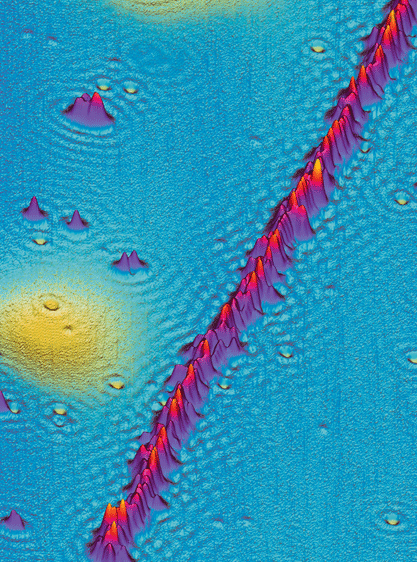

I’m not going to weigh in on whether Stellacci’s stripes are real or not. I know some very smart STMers on either side, so I doubt consensus will be reached soon. Instead, let me do what historians of science usually do: put controversial research in perspective, followed by a point for the defense and a point for the prosecution.

Controversies like this are pretty common. The point of doing forefront research is to push our ability to make and measure stuff right to the limits. Robert Service has made a career out of reporting such disputes, for which historians of science should be grateful. For instance, I’ve made frequent use of his article on a related dispute from 2003, “Molecular Electronics – Next Generation Technology Hits an Early Mid-Life Crisis.” If you look at the history of science you’ll find lots of disputes, sometimes extending over decades, over matters of fact that you would think could be easily resolved. One of my favorite examples is the more than thirty-year period, from the early 1920s to the late 1950s, in which cytogeneticists nearly uniformly agreed that a normal human somatic cell contains 48 chromosomes (rather than the now accepted 46). ((Described in Aryn Martin, “Can’t Any Body Count? Counting as an Epistemic Theme in the History of Human Chromosomes,” Social Studies of Science (2004).)) How hard can it be to count chromosomes that are almost a thousand times larger than Stellacci’s nanoparticles? And yet, even something as simple as counting can remain indeterminate (or determined but incorrect) for a very long time.

The instrument Stellacci used to image his nanoparticles, the scanning tunneling microscope, has a particularly rich history of disputes. STM images are made by bringing a sharp metal probe very close to the surface being imaged while maintaining a voltage difference between the probe and the sample. This encourages some electrons to “tunnel” from the sample to the probe and vice versa.
Generally, the closer the probe is to the surface, the more likely electrons are to tunnel (the voltage difference determines which direction predominates), so the net number of tunneling electrons is a reasonable proxy for the z-height of the sample at any given x-y position of the probe. As you move the probe around in x and y, you build up a matrix of tunnel-current values, one per x-y position, which you can then convert into a three-dimensional image of the sample.

Roughly, the computer to which the STM is hooked up registers a single value for the tunnel current per increment of probe position. That is, the STM periodically samples the sample, and each sampled value is fed both to the imaging output and to the circuit controlling the height of the probe at the next increment. Feedback that is too strong can make the probe constantly overshoot and then play catch-up, so even a smooth surface will seem to have undulations. Unfortunately, the phenomena researchers are looking for on a surface are also often periodic in nature – as, for instance, are Stellacci’s stripes. If you think you’ve made a sample with stripy features, you want to get an STM image with alternating patches of light and dark, up and down.

A nice analogy, suggested to me by my Rice colleague Kevin Kelly, is of a strobe light illuminating water coming out of a tap. If the strobe samples the water at the right rate, we see a series of drops accelerating under the force of gravity. If the strobe frequency and duration are varied, though, we might see a smooth flow of water or we might see water drops that appear to move upward into the tap.

So it’s not hard to find people who are skeptical of claims that a particular STM image indicates the existence of some periodic nanostructure, particularly if the distance between periodic features is near the limits of the instrument’s resolution. Perhaps the most famous such case involved STM images of DNA made in the late 1980s and early 1990s.
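
To make the feedback and strobe points a little more concrete, here is a toy sketch in Python. It is not how any real STM controller works: the exponential current model, the decay constant `KAPPA`, the setpoint `SETPOINT_GAP`, the one-increment lag in the hypothetical `scan_line` function, and all the names are illustrative assumptions. `scan_line` scans a perfectly flat surface, sampling the current once per increment and using it to correct the tip height; with a gentle gain the tip settles, while with too much gain it keeps overshooting and the recorded "image" shows regular ripples that have no counterpart on the sample. `apparent_frequency` applies the standard aliasing relation behind the strobe analogy: a periodic signal sampled at the wrong rate can appear slowed, frozen, or running backward.

```python
import math

# Toy parameters (hypothetical, chosen only for illustration).
KAPPA = 1.0          # decay constant of the tunnel current, per angstrom
SETPOINT_GAP = 5.0   # tip-sample gap the controller tries to hold, angstroms


def tunnel_current(gap):
    """Toy model: tunnel current falls off exponentially as the gap widens."""
    return math.exp(-2.0 * KAPPA * gap)


def scan_line(surface, gain, delay=1, start_gap=5.5):
    """Scan a 1-D height profile and return the tip height at each increment.

    At every increment the current is sampled once. The tip height for the
    next increment is corrected using the sample taken `delay` increments
    earlier (an assumed stand-in for loop latency).
    """
    log_setpoint = math.log(tunnel_current(SETPOINT_GAP))
    z = surface[0] + start_gap
    log_readings = []
    image = []
    for height in surface:
        image.append(z)  # the recorded "topography" is the tip height
        log_readings.append(math.log(tunnel_current(z - height)))
        if len(log_readings) > delay:
            error = log_readings[-1 - delay] - log_setpoint
            # Too much current means the tip is too close, so retract it.
            z += gain * error / (2.0 * KAPPA)
    return image


def apparent_frequency(true_freq, sample_freq):
    """Frequency a sampled periodic signal appears to have (aliasing).

    The result lies between -sample_freq/2 and +sample_freq/2; a negative
    value means the pattern appears to run backward, like drops climbing
    back into the tap under a strobe.
    """
    return true_freq - sample_freq * round(true_freq / sample_freq)


if __name__ == "__main__":
    flat = [0.0] * 60
    for gain in (0.2, 1.0):
        tail = scan_line(flat, gain)[-12:]
        print(f"gain={gain}: ripple over last 12 increments = "
              f"{max(tail) - min(tail):.3f} angstrom")
    for strobe in (9.5, 10.0, 10.5):
        print(f"drops at 10/s, strobe at {strobe}/s -> apparent "
              f"{apparent_frequency(10.0, strobe):+.1f}/s")
```

Under the lag assumed here, the toy loop starts to ring once `gain` exceeds 0.25 and stops decaying at `gain = 1.0`, where a flat surface comes out with ripples six increments long. The ripple period is set by the controller rather than by the sample, which illustrates why periodic features near an instrument's limits invite skepticism.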

At the time, many STMers harbored hopes that their instrument could resolve the base pairs in a strand of DNA and might therefore be used in genetic sequencing. Lots of STMers tried to image DNA; several groups published such images; one Caltech group even managed to get an atomic-resolution image of DNA on the cover of Nature that appeared to show a helix with the right pitch distance (the linear distance between two turns of the helix). And yet, there were lots of good reasons to think that an organic molecule like DNA should be difficult to image via electron tunneling. Whispers began to circulate that some of the best images of “DNA” were actually images of complex defects in a graphite substrate that might or might not have any DNA deposited on it. In the end, images such as the 1990 Nature cover were never definitively disproved, but the uncertainties surrounding their validity grew so great that almost everyone moved away from trying to image DNA with an STM. In the late ’90s and early 2000s, the three or four remaining groups showed that DNA could be imaged, but only under very specific and difficult conditions completely unlike those used in the early ’90s, conditions that, so far, have barred using an STM for genetic sequencing.

The STM of DNA case, then, leads to my two points about Stellacci’s stripes. My point for the defense is that even a result that turns out, after vigorous debate, to be wrong can be extraordinarily productive. Lots of people got into STM on the basis of those images of “DNA,” even if it’s still uncertain whether there was actually any DNA there. The debate about those images led to a general tightening of standards for STM image production and interpretation, and a better understanding of which applications STM was and was not good for. The DNA boom provided an early market for commercial STMs that gave manufacturers the revenue to build better versions that could be used in more appropriate ways. Since the vast majority of scientific findings are ignored, a finding that turns out to be questionable but that is actually taken up for productive debate is doing pretty well. I don’t have the expertise to say whether Stellacci is correct or not, but even if a consensus emerges that he is wrong, it looks to me like he and his skeptics will still have managed to move the field forward, not back. (Conversely, if his skeptics turn out to be wrong, they will still have done the field – and Stellacci himself – a great service.)

My point for the prosecution is that some of the criticism of Stellacci’s skeptics’ methods is misplaced. Service’s article offers a number of quotes from Stellacci and his allies complaining that the skeptics have used extrascientific means to carry out the debate – that they have resorted to blog posts and non-peer-reviewed articles instead of remaining within the arena of peer-reviewed journals. That’s a rather ahistorical view of how scientific controversies proceed. Peer-reviewed journals are, of course, an important mechanism for fostering the validity of scientific contributions, but we all know that peer review is slow and hardly error-free.
Often, peer-reviewed articles only make sense within some ecology of other forms of communication. ((For a particularly engaging article on this topic with a self-explanatory title, see Bruce Lewenstein, “From Fax to Facts: Communication in the Cold Fusion Saga,” Social Studies of Science (1995).)) In the "STM of DNA" controversy, the thread of argument left quite a light footprint in peer-reviewed articles – it’s difficult to piece together, just from published texts, who said what when, much less when and why various actors changed their minds about STM of DNA. Most of the influential voices used conference presentations and post-presentation conversations to persuade themselves, each other, and the rest of the community that there were serious problems with STM of DNA. Some of the forms of communication used by Stellacci’s skeptics today (blogs and Twitter) didn’t exist back then, but if they had you can bet they would’ve been used too. If history is anything to go by (and I hope it is!) there’s nothing inherently unscientific about using any mode of communication you can to get your point across.